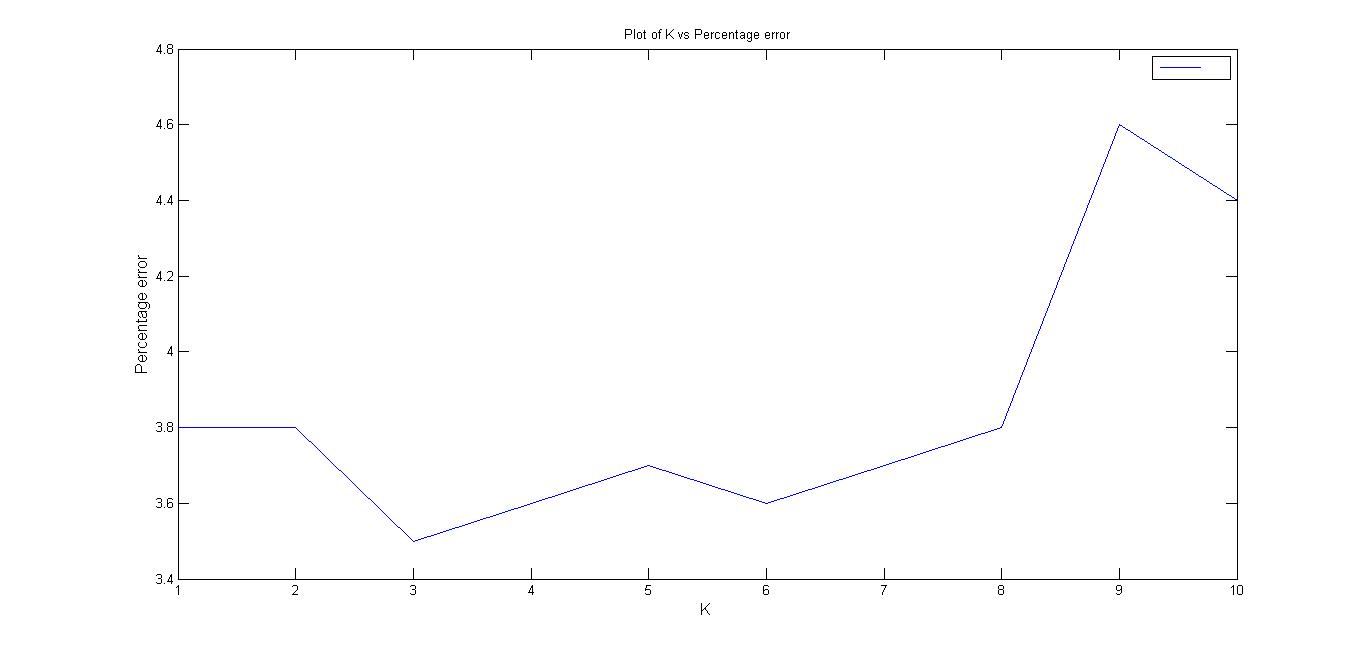

Least error obtained for k=3

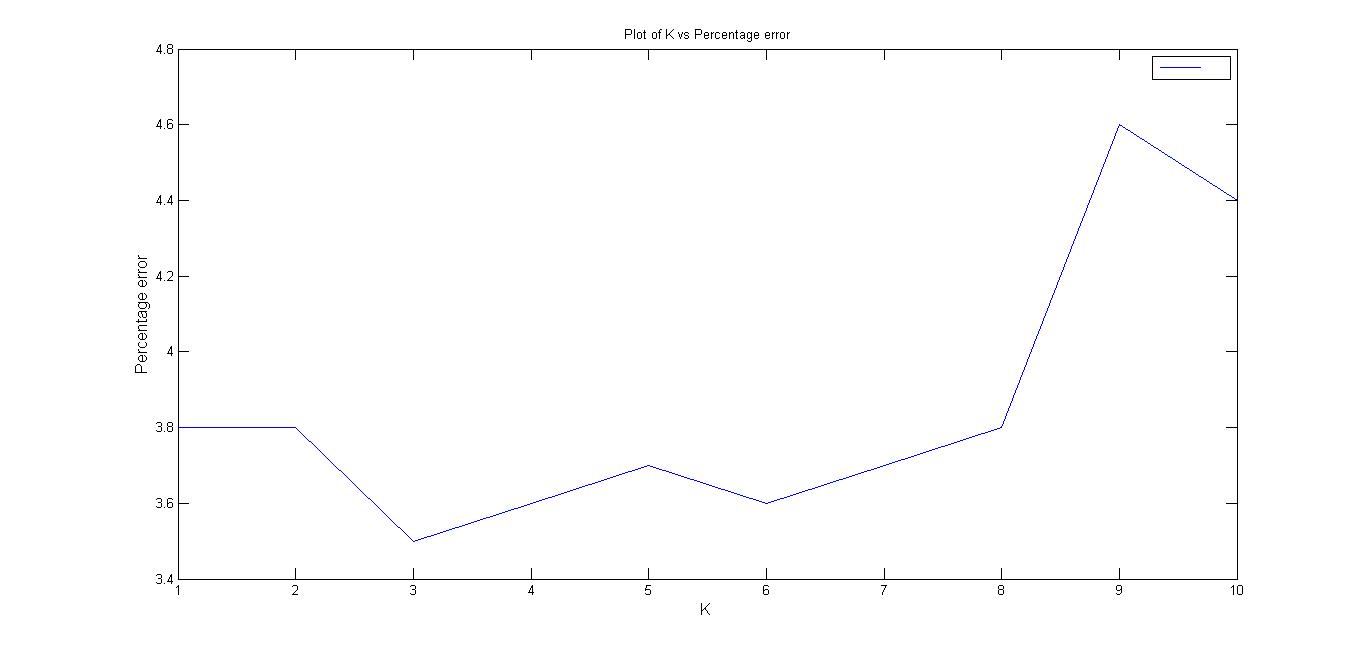

Least error obtained for k=3

From the graph of k versus percentage error on the test set, we notice that the percentage error first decreases as k increases, reaching its minimum at k=3. The graph turns at k=4, and the percentage error then increases with any further increase in k. This behaviour is expected: for the smallest values of k, a data element is classified from too few neighbours, so the vote is unreliable. From k=4 onward the error rises because the neighbourhood becomes large enough to contain points from several digit classes, which makes it harder to associate the data element with its correct class.
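The sweep over k described above can be sketched as follows. This is a minimal illustration only, not the code used for the experiments: the function names `knn_predict` and `error_rate` and the tiny 2-D data set are hypothetical stand-ins for the digit images.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k):
    """Return the majority label among the k training points nearest to query."""
    # Rank training indices by Euclidean distance to the query point.
    order = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def error_rate(train_x, train_y, test_x, test_y, k):
    """Percentage of test points that k-NN misclassifies."""
    wrong = sum(
        knn_predict(train_x, train_y, x, k) != y for x, y in zip(test_x, test_y)
    )
    return 100.0 * wrong / len(test_x)


# Hypothetical toy data: two well-separated classes in the plane.
train_x = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
train_y = [0, 0, 0, 1, 1, 1]
test_x = [(0.5, 0.5), (5.5, 5.5)]
test_y = [0, 1]

# Evaluate the test error for several values of k and pick the smallest.
errors = {k: error_rate(train_x, train_y, test_x, test_y, k) for k in (1, 3, 5)}
best_k = min(errors, key=errors.get)
```

Plotting `errors` against k gives the kind of curve discussed above; on real digit data the minimum would be read off the same way.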

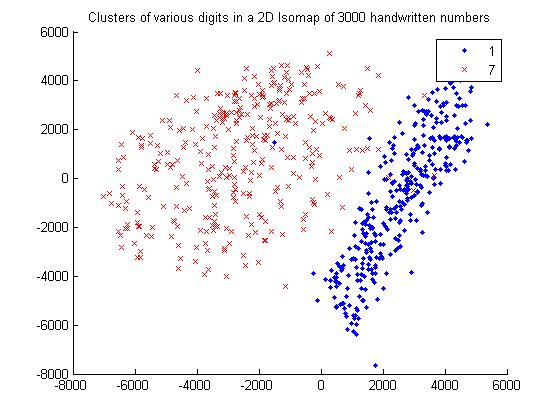

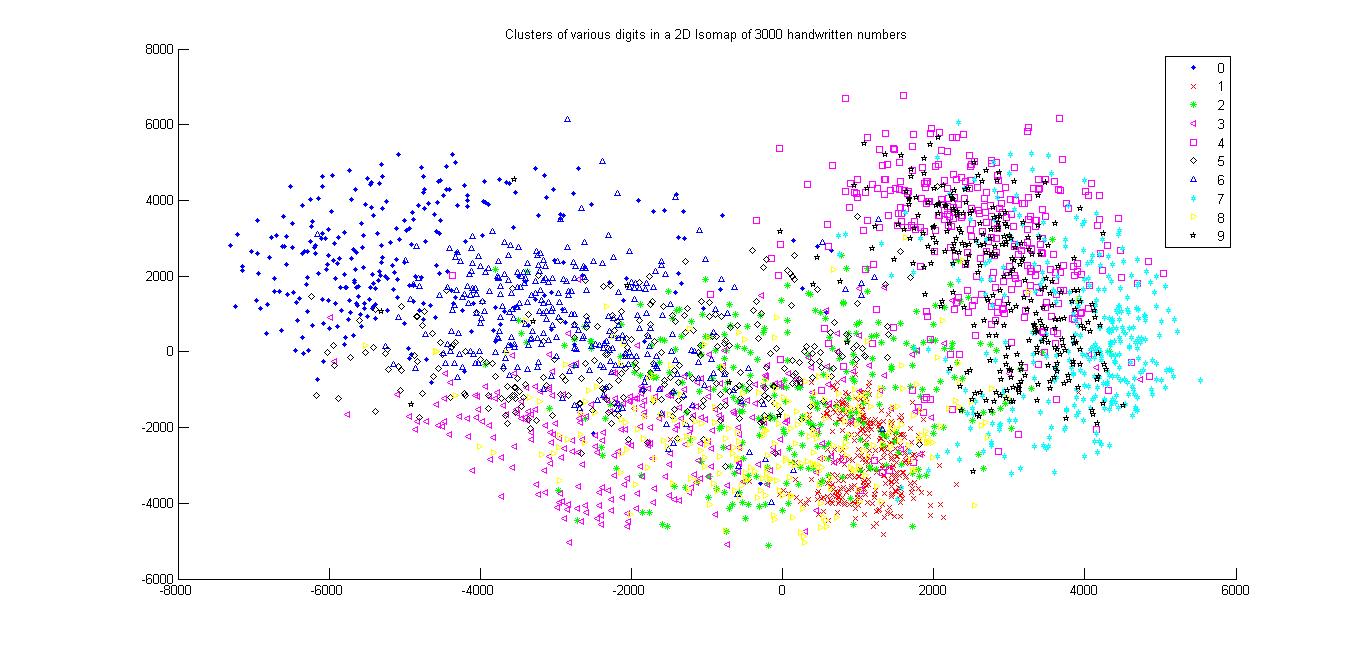

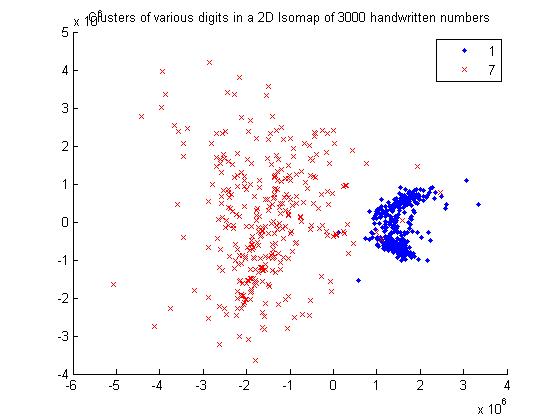

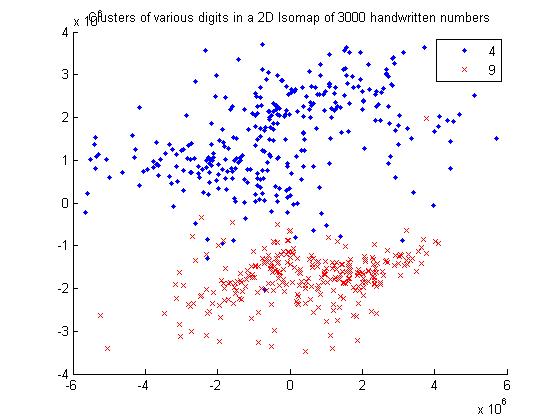

Cluster Diagram

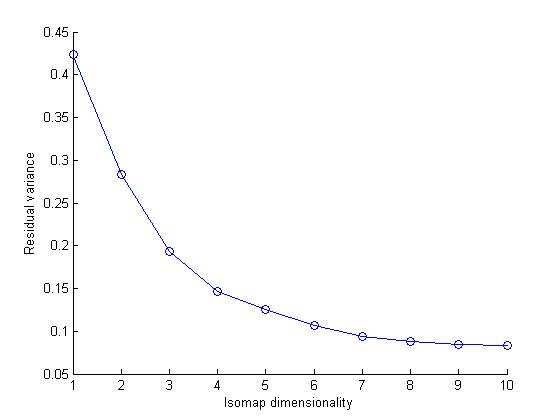

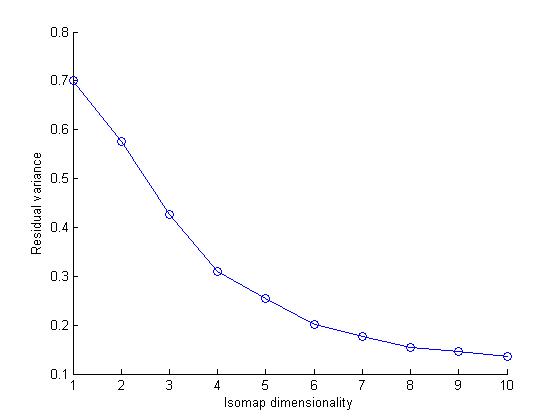

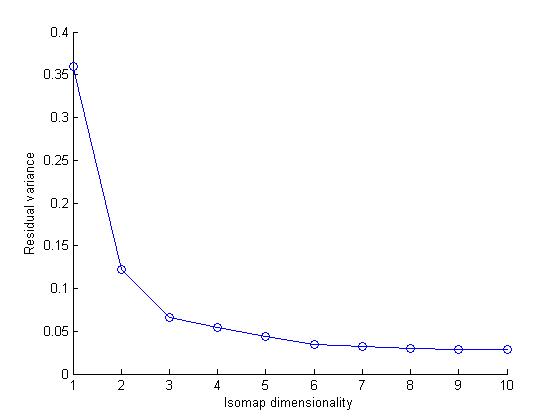

Residual Variance

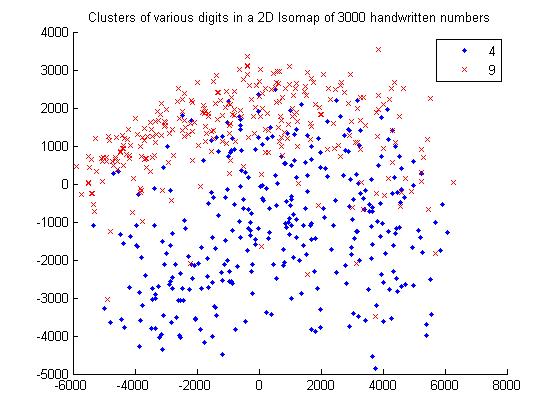

Cluster Diagram

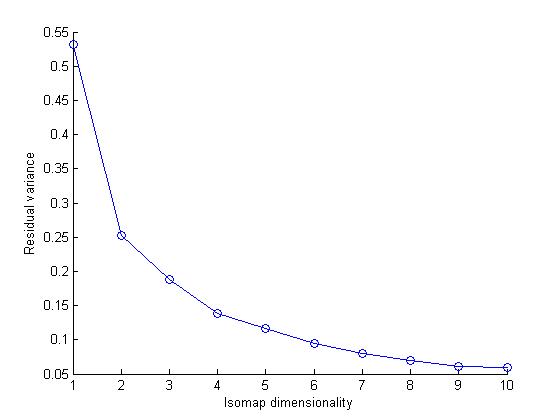

Residual Variance

Cluster Diagram

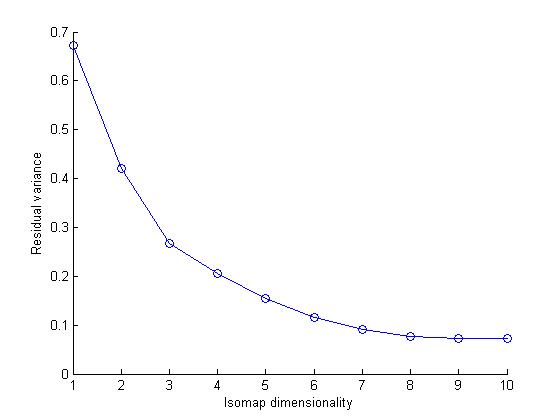

Residual Variance

Cluster Diagram

Residual Variance

Cluster Diagram

Residual Variance

Cluster Diagram

Residual Variance

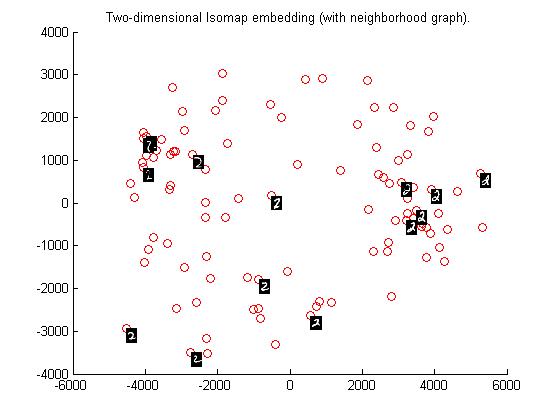

Euclidean distance applied to the images of "2" in the database

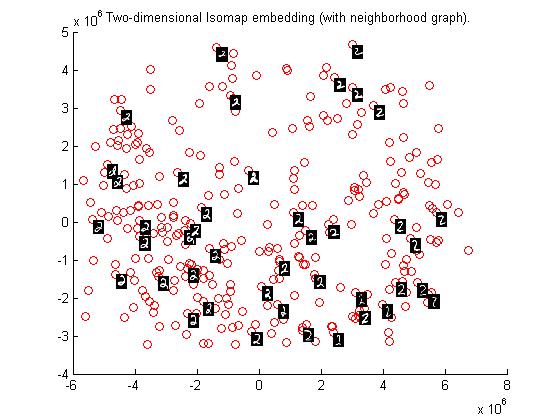

Tangent distance applied to the images of "2" in the database

Isomap computes a low-dimensional embedding of a set of high-dimensional data points. The algorithm provides a simple method for estimating the underlying geometry of a data manifold, based on a rough estimate of each data point's neighbors on the manifold. Different distance metrics can be used when computing an Isomap model of a dataset. In this assignment we use the Euclidean and tangent distance metrics.
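To make the role of the neighbor estimate concrete, here is a minimal sketch of the first two Isomap steps: building a nearest-neighbor graph and approximating manifold distances by shortest paths through it. Isomap then applies classical multidimensional scaling to these distances, which is omitted here. The function name `geodesic_distances`, its parameters, and the toy points are assumptions made for illustration; the `dist` argument shows where a different metric could be plugged in.

```python
import math


def geodesic_distances(points, n_neighbors, dist=math.dist):
    """Approximate manifold distances via shortest paths in a k-NN graph."""
    n = len(points)
    inf = float("inf")
    d = [[inf] * n for _ in range(n)]
    for i in range(n):
        d[i][i] = 0.0
        # Connect each point to its n_neighbors nearest points.
        nearest = sorted(
            (j for j in range(n) if j != i),
            key=lambda j: dist(points[i], points[j]),
        )
        for j in nearest[:n_neighbors]:
            w = dist(points[i], points[j])
            d[i][j] = d[j][i] = w  # treat the neighborhood graph as undirected
    # Floyd-Warshall: length of the shortest path between every pair of points.
    for m in range(n):
        for i in range(n):
            for j in range(n):
                if d[i][m] + d[m][j] < d[i][j]:
                    d[i][j] = d[i][m] + d[m][j]
    return d


# Hypothetical toy data: five points along an L-shaped curve.
points = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]
geo = geodesic_distances(points, n_neighbors=1)
straight = math.dist(points[0], points[4])
# geo[0][4] follows the curve, so it is longer than the straight-line distance.
```

The gap between `geo[0][4]` and `straight` is what lets Isomap recover the curve's own geometry rather than the geometry of the space it sits in.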

I noticed that the clusters in the data set become denser when the distance metric is changed from Euclidean to tangent. This is because the Euclidean metric compares data points naively, on the basis of the sum of squared pixel-to-pixel differences, whereas the tangent metric also takes into account the various rotational transformations of the pixels. Clearly, considering these transformations makes sense.
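The difference between the two metrics can be illustrated with a simplified one-sided tangent distance. The sketch below is not the implementation used in this assignment: it works on a 1-D signal instead of an image, and it uses a single transformation (a small shift) as a stand-in for the rotations mentioned above. The names `one_sided_tangent_distance` and `shift_tangent` are hypothetical.

```python
import math


def euclidean(x, y):
    """Square root of the sum of squared element-wise differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def one_sided_tangent_distance(x, y, tangent):
    """Distance from y to the line {x + a * tangent}, minimised over a."""
    diff = [b - a for a, b in zip(x, y)]
    tt = sum(t * t for t in tangent)
    if tt == 0.0:
        return euclidean(x, y)  # no usable tangent direction
    # Least-squares step along the tangent that brings x closest to y.
    a = sum(d * t for d, t in zip(diff, tangent)) / tt
    return math.sqrt(sum((d - a * t) ** 2 for d, t in zip(diff, tangent)))


def shift_tangent(x):
    """Central-difference derivative of a 1-D signal (tangent of a small shift)."""
    n = len(x)
    return [(x[min(i + 1, n - 1)] - x[max(i - 1, 0)]) / 2.0 for i in range(n)]


# Hypothetical example: y is x moved one position to the left.
x = [0, 0, 1, 2, 1, 0, 0]
y = [0, 1, 2, 1, 0, 0, 0]
d_euclid = euclidean(x, y)
d_tangent = one_sided_tangent_distance(x, y, shift_tangent(x))
```

Because the minimisation includes the case of no transformation (a = 0), this tangent distance can never exceed the Euclidean distance. That is consistent with the observation that points belonging to the same digit move closer together.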

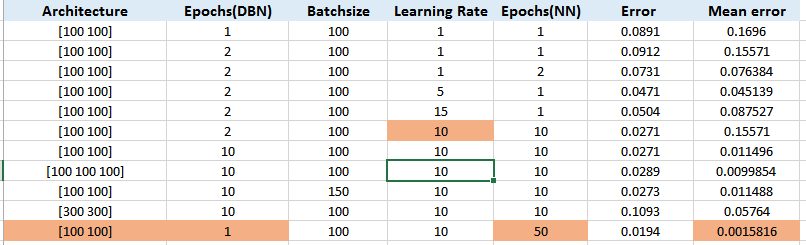

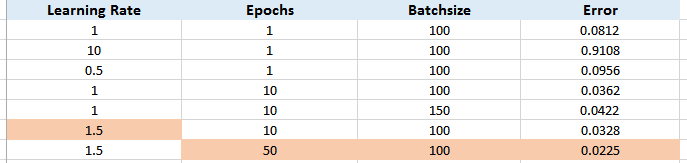

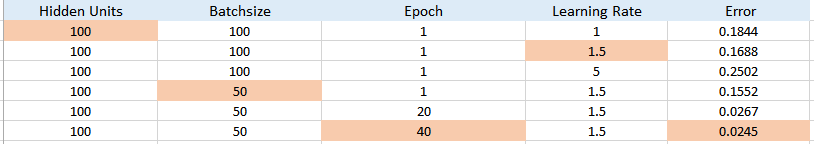

Training is done on 60,000 images, and a test set of 10,000 images is used.

In deep learning, learning is done in several phases. Each phase uses the information deduced from the previous phases, thereby improving the classification or the representation of features at each phase. Multiple levels of latent variables allow sharing of statistical strength. The learning time and percentage error of the model depend on the layer architecture and on parameters such as the learning rate and the number of epochs. We need to vary the parameters in light of the results obtained in the previous phases. Continuing this process, we eventually reach the minimum-error state.

With an increase in the number of epochs (keeping the other parameters the same), the percentage error decreases but the time taken to perform the experiment increases. With an increase in the learning rate (keeping the other parameters the same), the percentage error increases but the time taken to perform the experiment decreases. Therefore, keeping the learning rate at 1 and the degree of the network at 2 while increasing the number of epochs could provide good results with a decreasing error percentage. See the orange-coloured line in the table for the experiment with minimal error (it follows the specifications given above).
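The qualitative effect of these two parameters can be seen even on a toy problem. The sketch below is not the deep network used in the experiments: it fits a single weight by gradient descent on a mean-squared error, and the function name `final_loss`, the data, and the learning-rate values are hypothetical. In this toy setting, more epochs lower the error at a stable learning rate, while a learning rate that is too large makes the updates overshoot and the error grows.

```python
def final_loss(learning_rate, epochs):
    """Fit y = w * x by gradient descent and return the final mean-squared error."""
    xs = [1.0, 2.0, 3.0]
    ys = [3.0, 6.0, 9.0]  # generated with the true weight w = 3
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of mean((w * x - y)^2) with respect to w.
        grad = sum(2.0 * x * (w * x - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n


few_epochs = final_loss(learning_rate=0.05, epochs=5)
many_epochs = final_loss(learning_rate=0.05, epochs=50)   # lower error, more work
too_large_rate = final_loss(learning_rate=0.25, epochs=20)  # updates overshoot
```

Each call performs `epochs` passes over the data, so the running time grows in proportion to the number of epochs, in line with the trade-off described above.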