Homework 1

Generative-Discriminative Learning

This homework explores the power of generative models. It has three parts.

- k-NN model (purely discriminative)

- Manifold model (purely generative)

- Deep learning (generative-discriminative)

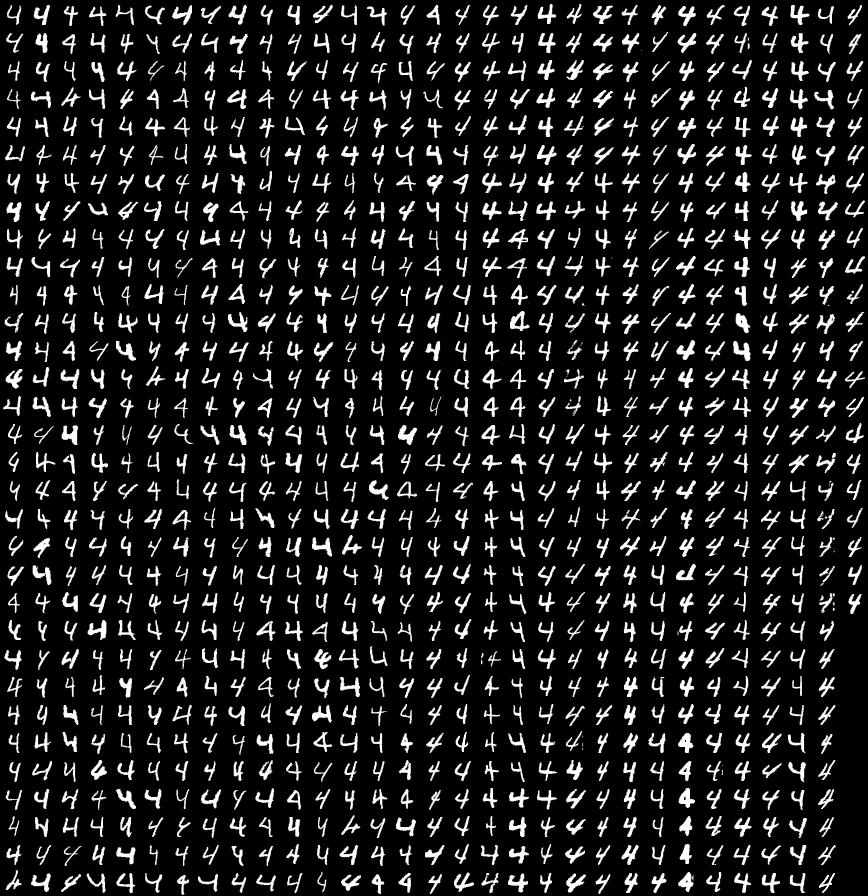

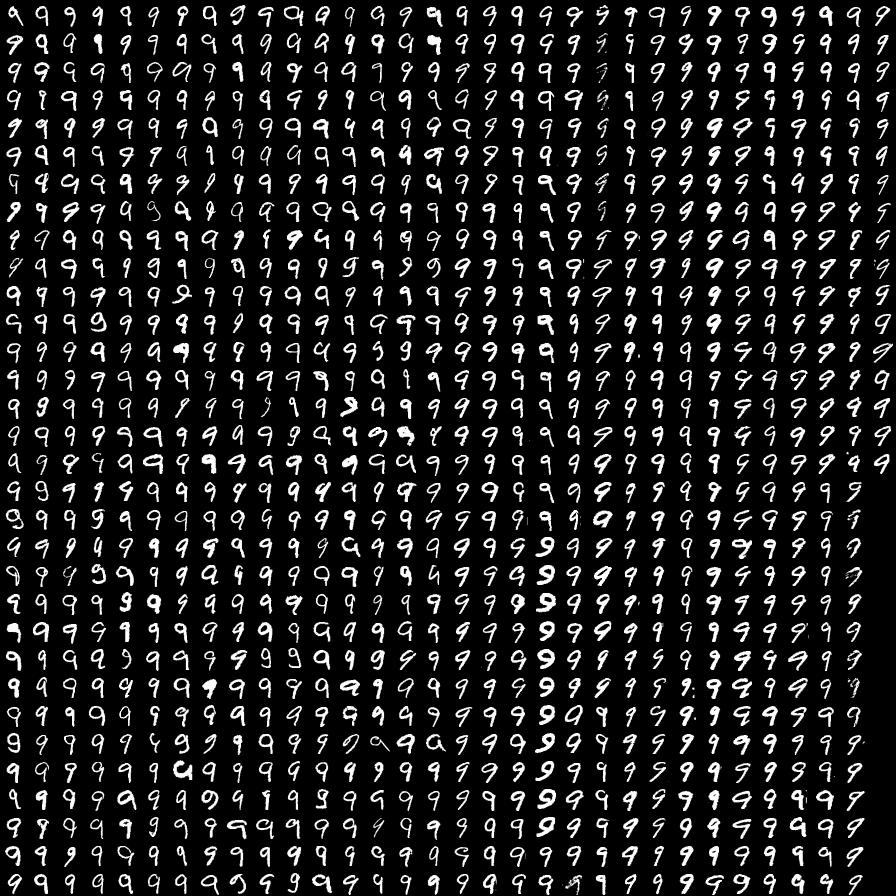

Handwritten numerals: MNIST dataset.

Some 4's and 9's from the MNIST dataset.


A. k-NN based classifier

Write a simple k-NN classifier in Matlab for the MNIST digit dataset. You may wish to use knnsearch. Experiment with the value of k and find the k that minimizes the error on the test set. Report the results as a graph with k on the x-axis and the percentage error on the test set on the y-axis.
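The sweep over k described above can be sketched as follows. This is a minimal illustration in plain Python rather than Matlab, run on a tiny made-up 2-D dataset instead of MNIST. The function names (`squared_distance`, `knn_predict`, `percent_error`) and the toy points are assumptions introduced for illustration only, and the brute-force neighbor search stands in for a library routine.

```python
from collections import Counter


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_predict(train_x, train_y, query, k):
    """Label of `query` by majority vote among its k nearest training points."""
    order = sorted(range(len(train_x)),
                   key=lambda i: squared_distance(train_x[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def percent_error(train_x, train_y, test_x, test_y, k):
    """Percentage of test points that k-NN misclassifies."""
    wrong = sum(knn_predict(train_x, train_y, x, k) != y
                for x, y in zip(test_x, test_y))
    return 100.0 * wrong / len(test_x)


# Toy stand-in data (NOT MNIST): two clusters in 2-D, with the last
# training point deliberately mislabeled to show why k > 1 can help.
train_x = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5), (0.5, 0.5)]
train_y = [4, 4, 4, 9, 9, 9, 9]
test_x = [(0.4, 0.4), (1, 1), (5.5, 5.5), (6, 6)]
test_y = [4, 4, 9, 9]

# Sweep k and record the test error for each value; these pairs are
# what would go on the x- and y-axes of the requested graph.
errors = {k: percent_error(train_x, train_y, test_x, test_y, k)
          for k in (1, 3, 5)}
for k, err in errors.items():
    print(f"k={k}: {err:.1f}% test error")
```

On the full dataset each image would be flattened to a 784-element vector, and sorting every training point for every query becomes very slow, so a dedicated nearest-neighbor search is the practical choice there.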

B. Manifold based modeling of MNIST digits

In this problem we see how an imaging system may construct models for handwritten numerals. We will use 3000 images from the MNIST database, each of size 28x28 pixels. Each image has a label indicating which digit it shows.

You will now consider whether these images lie along some lower-dimensional manifold in the 784-dimensional image space. The objective of this exercise is to learn the importance of the distance metric; we shall consider both the plain Euclidean distance and the tangent distance.

- Construct the 2-D Isomap model for the first 3000 examples in the dataset using Euclidean distance. Show clusters for a sample of the digits:

- 1 and 7

- 4 and 9

- All the digits

- EXTRA CREDIT: Show some of the digits on the maps, as in the Tenenbaum et al. paper.

- Construct the 2-D Isomap model using tangent distance. Show the same clusters as above.
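The three stages of Isomap (neighborhood graph, geodesic distances, classical MDS) can be sketched as follows. This is a plain-Python illustration under stated assumptions, not the Matlab implementation by Tenenbaum et al.: the function names and the half-circle toy data are hypothetical, power iteration with deflation is swapped in for a full eigendecomposition, and the neighborhood graph is assumed to be connected. It uses Euclidean distance; a different metric would replace `pairwise_distances`.

```python
import math
import random


def pairwise_distances(points):
    """Full matrix of Euclidean distances between points."""
    n = len(points)
    return [[math.dist(points[i], points[j]) for j in range(n)]
            for i in range(n)]


def geodesic_distances(dist, k):
    """Shortest-path distances over the symmetric k-nearest-neighbor graph."""
    n = len(dist)
    inf = float("inf")
    graph = [[0.0 if i == j else inf for j in range(n)] for i in range(n)]
    for i in range(n):
        others = sorted((j for j in range(n) if j != i),
                        key=lambda j: dist[i][j])
        for j in others[:k]:
            graph[i][j] = graph[j][i] = dist[i][j]
    # Floyd-Warshall all-pairs shortest paths (O(n^3), toy sizes only).
    for m in range(n):
        for i in range(n):
            for j in range(n):
                if graph[i][m] + graph[m][j] < graph[i][j]:
                    graph[i][j] = graph[i][m] + graph[m][j]
    return graph


def classical_mds(geo, dims=2, iterations=200):
    """Embed points so that Euclidean distances approximate `geo`."""
    n = len(geo)
    sq = [[d * d for d in row] for row in geo]
    row_mean = [sum(row) / n for row in sq]
    total_mean = sum(row_mean) / n
    # Double centering: B = -1/2 * J * D^2 * J (D^2 is symmetric).
    b = [[-0.5 * (sq[i][j] - row_mean[i] - row_mean[j] + total_mean)
          for j in range(n)] for i in range(n)]
    rng = random.Random(0)
    coords = [[0.0] * dims for _ in range(n)]
    for d in range(dims):
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        for _ in range(iterations):  # power iteration for the top eigenvector
            w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:
                break
            v = [x / norm for x in w]
        bv = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
        eigenvalue = sum(v[i] * bv[i] for i in range(n))
        scale = math.sqrt(max(eigenvalue, 0.0))
        for i in range(n):
            coords[i][d] = scale * v[i]
        for i in range(n):  # deflate so the next pass finds the next component
            for j in range(n):
                b[i][j] -= eigenvalue * v[i] * v[j]
    return coords


# Toy data (NOT MNIST): 20 points on a half circle of radius 1.
points = [(math.cos(math.pi * t / 19), math.sin(math.pi * t / 19))
          for t in range(20)]
geo = geodesic_distances(pairwise_distances(points), k=2)
embedding = classical_mds(geo, dims=2)
print("straight-line end-to-end distance:", math.dist(points[0], points[-1]))
print("geodesic end-to-end distance:", geo[0][-1])
```

The end points of the half circle are 2 apart in a straight line, but the geodesic distance follows the curve, and the first embedding coordinate orders the points along it. The cubic-time shortest-path step makes this sketch unsuitable for 3000 images; it is meant only to show what each stage computes.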

Resources for ISOMAP

- Information on the algorithm: Joshua B. Tenenbaum, Vin de Silva, and John C. Langford, A Global Geometric Framework for Nonlinear Dimensionality Reduction, Science, v. 290, pp. 2319-2323, 2000.

- You may wish to use the widely used Matlab implementation of Isomap by Tenenbaum et al. You will need just two files from this package: Isomap.m and L2_distance.m, and you can ignore the other files. See the Readme page to understand how to use these files. However, you may also use any C or Java code. If you adapt code from others, please give the link in your webpage.

- Source code in C [td.c] for computing tangent-distance. You will NOT have to use this file directly. Once it has been compiled using mex, you can use this Matlab code [tangent_d.m] to invoke it. The td.c file is a modified version of Daniel Keysers' tangent distance implementation to allow it to be invoked from Matlab. In case you want to implement the whole work in C, you can use the original pure C implementation by Daniel Keysers.

C. Deep learning

In deep learning, the accuracy and training time of a model depend on the layer architecture and on parameters such as the learning rate and the number of epochs. Use the Matlab Deep Learning Toolbox and experiment with various layer architectures and parameters to improve the accuracy as much as you can. See the 'test' examples for how to use the Toolbox.

Resources for Deep Learning

Submission:

You must submit a single HTML page, home.iitk.ac.in/~youruserid/cs365/hw1/index.html, in the following format, which may link to different files under your userid.

*Draw a graph showing the various values of k vs. percentage error.

*Write your observations about the data set based on the graph, if any.

*Briefly describe Isomap.

*Draw graphs showing clusters for the sub-questions of 1) and 2)

*Write your observations based on the above graphs.

*Briefly describe the procedure of the experiment you are running, i.e. the working of deep architectures.

*Show a table with the columns

1.Parameters you have chosen (like epoch, learning rate)

2.Architecture of the network (Ex: [200 100 50 10])

3.Percentage error on test set

4.Time to run experiment in minutes (optional)

Mark or color the best architecture and parameters you could come up with.

*Write your observations based on the above table.

Resources

Please put a link to the source code you have used (if you didn't use the code suggested on this page).

Evaluation: If you do everything stated in the assignment, you get 80% marks. The other 20% is reserved for particularly excellent explorations of any of the questions.

Due date: Friday 8th February, 5:00 PM.