CS365 Assignment 1:

Results Obtained:

| Agent Type | Starting Point | Number of moves | Performance | Dirt Cleaned |
|---|---|---|---|---|
| A | (3,5) | 10 | 34 | 1.8 |
| | | | 45 | 2.7 |
| | | | 23 | 0.4 |
| | | | 34 | 1.1 |
| | | | 34 | 2.1 |
| | | Average | 34 | 1.62 |
| | | 50 | 82 | 4.7 |
| | | | 5 | 2.2 |
| | | | 137 | 6.9 |
| | | | 82 | 4.7 |
| | | | 82 | 5.2 |
| | | Average | 77.6 | 4.74 |
| | (7,1) | 10 | 23 | 1.3 |
| | | | -10 | 0 |
| | | | -10 | 0 |
| | | | 1 | 0.3 |
| | | | 23 | 1.1 |
| | | Average | 5.4 | 0.54 |
| | | 50 | 27 | 3 |
| | | | 27 | 2.2 |
| | | | 38 | 3.8 |
| | | | 38 | 2.7 |
| | | | 60 | 3.9 |
| | | Average | 38 | 3.12 |
| B | (3,5) | 10 | 45 | 2.9 |
| | | | 45 | 2.9 |
| | | | 45 | 2.9 |
| | | | 45 | 2.9 |
| | | | 45 | 2.9 |
| | | Average | 45 | 2.9 |
| | | 50 | 148 | 7.1 |
| | | | 192 | 7.9 |
| | | | 104 | 5.7 |
| | | | 159 | 7.6 |
| | | | 148 | 6.4 |
| | | Average | 150.2 | 6.94 |
| | (7,1) | 10 | 23 | 2.1 |
| | | | 23 | 1.2 |
| | | | 12 | 1.3 |
| | | | 23 | 1.8 |
| | | | 1 | 0.5 |
| | | Average | 16.4 | 1.38 |
| | | 50 | 126 | 6.5 |
| | | | 137 | 7.4 |
| | | | 104 | 6.3 |
| | | | 137 | 7.5 |
| | | | 115 | 7.0 |
| | | Average | 123.8 | 6.94 |
| C | (3,5) | 10 | 45 | 2.8 |
| | | | 45 | 2.8 |
| | | | 45 | 2.8 |
| | | | 45 | 2.8 |
| | | | 45 | 2.8 |
| | | Average | 45 | 2.8 |
| | | 50 | 126 | 6.2 |
| | | | 148 | 7.1 |
| | | | 170 | 8.1 |
| | | | 192 | 8.3 |
| | | | 170 | 8.0 |
| | | Average | 161.2 | 7.54 |
| | (7,1) | 10 | 34 | 2.6 |
| | | | 23 | 1.2 |
| | | | 34 | 1.5 |
| | | | 12 | 1.3 |
| | | | 23 | 1.2 |
| | | Average | 25.2 | 1.56 |
| | | 50 | 126 | 7.1 |
| | | | 159 | 7.9 |
| | | | 170 | 8.1 |
| | | | 159 | 7.7 |
| | | | 137 | 7.2 |
| | | Average | 150.2 | 7.6 |
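Each Average row is the mean of the five runs listed above it. The short Python sketch below recomputes a few of them from the per-run values in the results table; the dictionary layout and the `averages` helper are only an illustration. For agent C starting at (7,1) it gives a mean dirt of 1.56 for 10 moves and a mean performance of 150.2 for 50 moves.

```python
# Per-run (performance, dirt cleaned) pairs copied from the results table,
# keyed by (agent type, starting point, number of moves).
runs = {
    ("A", (3, 5), 10): [(34, 1.8), (45, 2.7), (23, 0.4), (34, 1.1), (34, 2.1)],
    ("B", (7, 1), 10): [(23, 2.1), (23, 1.2), (12, 1.3), (23, 1.8), (1, 0.5)],
    ("C", (7, 1), 10): [(34, 2.6), (23, 1.2), (34, 1.5), (12, 1.3), (23, 1.2)],
    ("C", (7, 1), 50): [(126, 7.1), (159, 7.9), (170, 8.1), (159, 7.7), (137, 7.2)],
}


def averages(trials):
    """Mean performance and mean dirt cleaned over the runs, rounded to 2 decimals."""
    n = len(trials)
    perf = sum(p for p, _ in trials) / n
    dirt = sum(d for _, d in trials) / n
    return round(perf, 2), round(dirt, 2)


for key, trials in runs.items():
    print(key, averages(trials))
```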

Parts A, B and C

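Comparing the average performance of the agents, C performs at least as well as B in every configuration (the two tie at 45 when starting from (3,5) with 10 moves) and better in all the others, while B outperforms A in every configuration.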

Agent A: Agent A perceives the dirt value at its current location and whether there is a wall in each direction. If its current location is dirty, it cleans it; otherwise it moves in a randomly chosen direction that is not blocked by a wall.
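A minimal Python sketch of Agent A's decision rule, assuming the percept is passed in as the dirt value on the current square plus the set of directions blocked by walls. The direction names, the `agent_a` function and the `"noop"` action are hypothetical; the assignment's own code is not reproduced here.

```python
import random

# Hypothetical names for the four moves; the assignment's code is not shown.
DIRECTIONS = ("up", "down", "left", "right")


def agent_a(dirt_here, walls, rng=random):
    """Simple reflex agent A.

    dirt_here -- dirt value perceived on the current square (0 means clean)
    walls     -- set of directions blocked by a wall
    """
    if dirt_here > 0:
        return "suck"
    open_dirs = [d for d in DIRECTIONS if d not in walls]
    if not open_dirs:
        return "noop"  # boxed in on all sides: nothing to do
    # No knowledge of where dirt is, so any open direction is equally good.
    return rng.choice(open_dirs)
```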

Agent B: Agent B perceives the dirt values at its own square and the 4 adjacent squares, and whether there is a wall in each direction. If its current location is dirty, it cleans it; otherwise it greedily moves to the adjacent square with the most dirt. If two or more squares share the maximum dirt value, it chooses randomly among them.
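Agent B's greedy rule with random tie-breaking can be sketched as follows. The percept format (a dict from direction to the dirt value on the adjacent square) and all names are assumptions for illustration only.

```python
import random

# Hypothetical names for the four moves.
DIRECTIONS = ("up", "down", "left", "right")


def agent_b(dirt_here, neighbour_dirt, walls, rng=random):
    """Greedy reflex agent B.

    neighbour_dirt -- dict mapping each direction to the dirt value perceived
                      on the adjacent square in that direction
    walls          -- set of directions blocked by a wall
    """
    if dirt_here > 0:
        return "suck"
    open_dirs = [d for d in DIRECTIONS if d not in walls]
    if not open_dirs:
        return "noop"
    best = max(neighbour_dirt.get(d, 0.0) for d in open_dirs)
    # Every open direction whose adjacent square holds the maximum dirt.
    candidates = [d for d in open_dirs if neighbour_dirt.get(d, 0.0) == best]
    # Ties are broken at random, so the agent is not fully deterministic.
    return rng.choice(candidates)
```

Because of the random tie-break, calling `agent_b` twice with identical percepts can return different moves.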

Agent C: Agent C perceives its location, the dirt values at its own square and the 4 adjacent squares, and whether there is a wall in each direction. It also has a memory that stores information about the squares it has already visited. If its current location is dirty, it cleans it and updates its memory; otherwise it chooses its next move as follows.

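To decide the next move, the agent sums the dirt values of 3 squares in each direction and chooses the direction with the maximum sum; one of these 3 squares is the adjacent square, whose dirt the agent perceives directly. For example, in the up and right directions it analyzes: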

The dirt values of the other 2 squares are taken from the agent's memory if available; otherwise a default value of 0.3 is assumed. If two directions have the same sum, the agent compares the dirt on the immediately adjacent square in each of those directions. If those values are also equal, it picks one of the two directions at random.
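A minimal sketch of Agent C's move selection, under stated assumptions. The figure showing exactly which 3 squares are analyzed in each direction is not available, so the hypothetical helper `squares_in_direction` simply takes the adjacent square and the next two squares in a straight line; the coordinate convention, the `AgentC` class and its `act` signature are likewise illustrative. The default of 0.3 for squares not in memory and the two-stage tie-break (adjacent square first, then random) follow the description above.

```python
import math
import random

DEFAULT_DIRT = 0.3  # dirt value assumed for squares not in memory (from the text)
# Hypothetical coordinate convention: (x, y) with y increasing downwards.
STEP = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


def squares_in_direction(pos, direction, n=3):
    """Hypothetical choice of analyzed squares: the next n squares in a straight line."""
    dx, dy = STEP[direction]
    x, y = pos
    return [(x + k * dx, y + k * dy) for k in range(1, n + 1)]


class AgentC:
    """Greedy agent with memory; names and signature are illustrative."""

    def __init__(self, rng=None):
        self.memory = {}  # square -> last known dirt value
        self.rng = rng or random.Random()

    def act(self, pos, dirt_here, neighbour_dirt, walls):
        # Remember what is perceived now: the adjacent square in each direction.
        for d, value in neighbour_dirt.items():
            self.memory[squares_in_direction(pos, d, 1)[0]] = value
        # The current square is clean now, or will be after Suck
        # (assumes a single Suck removes all dirt).
        self.memory[pos] = 0.0
        if dirt_here > 0:
            return "suck"
        open_dirs = [d for d in STEP if d not in walls]
        if not open_dirs:
            return "noop"

        def score(d):
            # Sum over the 3 analyzed squares, defaulting to DEFAULT_DIRT.
            return sum(self.memory.get(sq, DEFAULT_DIRT)
                       for sq in squares_in_direction(pos, d))

        best = max(score(d) for d in open_dirs)
        tied = [d for d in open_dirs if math.isclose(score(d), best)]
        if len(tied) > 1:
            # First tie-break: dirt on the immediately adjacent square.
            best_adj = max(neighbour_dirt.get(d, 0.0) for d in tied)
            tied = [d for d in tied
                    if math.isclose(neighbour_dirt.get(d, 0.0), best_adj)]
        # Second tie-break: random choice among the directions still tied.
        return self.rng.choice(tied)
```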

Part D

a) A reflex agent is perfectly rational in an environment in which all actions performed by the agent are equally rewarding. It is also rational if the environment is fully observable, i.e. the current percept alone is enough to make the best decision. More generally, any environment in which memory or internal state is not required to do well makes a reflex agent rational.

b) No, it is not 100% deterministic. Consider a situation in which all 4 squares around the bot have the same dirt value: the bot chooses its next move randomly, so it can behave differently in the same situation.

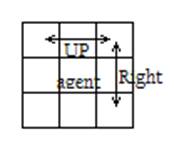

c) Randomized

agent will perform poorly in cases where the bot has choices to move but all choices

are not equally favorable for e.g. when the bot has unsymmetrical path

distribution like this:

d) The agent will have to keep cleaning the current location as long as it remains dirty, so the Suck action can be replaced by “Suck till the square is clean”. Also, with an error rate of 20%, the dirt sensor can report a wrong dirt value for the floor, so the agent should take a few more readings to get a more reliable measurement before making a decision. For example, if two successive readings agree and the sensor errs independently on each reading, the probability that both are wrong is 0.2 × 0.2 = 4%, so the chance that the agreed value is incorrect falls from 20% to 0.04 / (0.04 + 0.64) ≈ 6%. The number of readings required will have to be judged by experimentation. Also, the agent will have to keep checking the grid indefinitely in case it missed some dirt earlier.
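The benefit of repeated readings can be quantified with a short calculation. This sketch assumes, beyond what the assignment states, that each reading is wrong independently with the same probability `error_rate` and that the sensor reports a binary dirty/clean value. After `n` readings that all agree, the probability that they are all wrong is `e**n / (e**n + (1 - e)**n)`; for `e = 0.2` that is 20%, about 5.9% and about 1.5% for 1, 2 and 3 readings.

```python
def prob_wrong_given_agreement(error_rate, n):
    """Probability that n agreeing sensor readings are all wrong.

    Assumes each reading is wrong independently with probability
    error_rate and the sensor output is binary (dirty / clean).
    """
    all_wrong = error_rate ** n
    all_right = (1 - error_rate) ** n
    # Both cases produce n agreeing readings; normalise over them.
    return all_wrong / (all_wrong + all_right)


for n in range(1, 5):
    print(n, round(prob_wrong_given_agreement(0.2, n), 4))
```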

e) The agent will have to keep checking the grid indefinitely. The probability that a square is dirty increases with the time since the agent last checked it, so the agent will have to repeatedly execute the shortest possible tour of all squares. Based on the differences between the dirt probabilities of different squares, the agent should schedule the cleaning of different parts of the floor at different time intervals, because a square becomes dirty again only roughly 10 moves after it is cleaned.
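The idea of revisiting squares at different intervals can be sketched with a greedy rule. This is a swapped-in heuristic, not the shortest-tour approach described above: assuming each square independently becomes dirty with a known, hypothetical per-step probability `rate`, it picks the square with the highest probability of being dirty per unit of travel distance (Manhattan distance, ignoring walls). All function names are illustrative.

```python
def prob_dirty(rate, steps_since_visit):
    """Probability that a square is dirty `steps_since_visit` steps after it was cleaned."""
    return 1 - (1 - rate) ** steps_since_visit


def manhattan(a, b):
    """Number of grid moves between squares a and b, ignoring walls."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def next_target(pos, rates, last_visit, now):
    """Greedy choice: the square with the best dirt probability per unit of travel."""
    def score(sq):
        p = prob_dirty(rates[sq], now - last_visit[sq])
        return p / (1 + manhattan(pos, sq))
    return max(rates, key=score)


rates = {(0, 0): 0.01, (0, 1): 0.2, (5, 5): 0.2}  # hypothetical dirt rates
print(next_target((0, 0), rates, {sq: 0 for sq in rates}, now=10))
```

Squares with a high dirt rate get picked again soon after a visit, while rarely dirtied squares are only revisited once enough time has passed.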

Part E

a) If we already know the colors of the floor and walls, one possible method would be to identify the regions of those colors in the image, or to assume that the largest region of uniform color is the floor. However, that would cause problems when the lighting varies across the floor, e.g. because of shadows.

Since we have a low perspective of the scene, another method could be to evaluate the likelihood that horizontal intensity-edge line segments at the top and sides of the image belong to the wall-floor boundary.
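A sketch of the color-based method, assuming the floor color is known in advance and the image is given as rows of (r, g, b) tuples. The function names and the tolerance of 40 are arbitrary illustrative choices. A fixed color tolerance like this is exactly what shadows on the floor break.

```python
def color_distance(c1, c2):
    """Euclidean distance between two RGB colors."""
    return sum((a - b) ** 2 for a, b in zip(c1, c2)) ** 0.5


def floor_mask(image, floor_rgb, tolerance=40.0):
    """Mark pixels whose color is within `tolerance` of the known floor color.

    image -- list of rows, each a list of (r, g, b) tuples with values 0-255
    """
    return [[color_distance(px, floor_rgb) <= tolerance for px in row]
            for row in image]
```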

b) First, identify the floor and determine its color. Project the floor onto a 2D (top-down) plane and divide it into a grid of 0.5 m squares. In each grid square, find the area covered by dirt from the difference in color, and assign the square a dirt value approximately equal to the fraction of its area occupied by dirt.
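A minimal sketch of the last step, assuming the floor has already been projected to a top-down image and converted into a boolean dirt mask (True where the pixel color differs from the floor color). `pixels_per_cell`, the number of pixels spanning one 0.5 m grid square, is a hypothetical calibration value, and partial cells at the image border are ignored.

```python
def dirt_per_cell(dirt_mask, pixels_per_cell):
    """Fraction of each grid cell covered by dirt.

    dirt_mask       -- top-down floor image as rows of booleans,
                       True where the color differs from the floor color
    pixels_per_cell -- side length of one 0.5 m cell in pixels (hypothetical)
    """
    rows = len(dirt_mask) // pixels_per_cell
    cols = len(dirt_mask[0]) // pixels_per_cell
    grid = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # All mask pixels that fall inside grid cell (r, c).
            block = [
                dirt_mask[y][x]
                for y in range(r * pixels_per_cell, (r + 1) * pixels_per_cell)
                for x in range(c * pixels_per_cell, (c + 1) * pixels_per_cell)
            ]
            grid[r][c] = sum(block) / len(block)
    return grid
```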

c) Yes. It can represent the fraction of dirt in each grid square, and hence it is good enough.

d) Using the number of grid squares it can see and knowing its camera angle, the agent can find its position by determining its distance from the 3 walls and dividing each distance by the grid size.
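A sketch of that calculation, assuming a camera mounted at a known height above the floor (the height, the function names and the choice of the left and front walls are hypothetical). If the base of a wall is seen at an angle θ below the horizontal, its horizontal distance is height / tan θ; dividing by the 0.5 m grid size gives the grid index. The distance to the third wall could serve as a consistency check.

```python
import math

CELL_SIZE = 0.5  # metres, grid size from part (b)


def distance_to_wall(camera_height, depression_angle_deg):
    """Horizontal distance to a wall whose base is seen at this angle below the horizon."""
    return camera_height / math.tan(math.radians(depression_angle_deg))


def grid_position(dist_left, dist_front, cell=CELL_SIZE):
    """Convert distances to the left and front walls into (column, row) grid indices."""
    return int(dist_left // cell), int(dist_front // cell)
```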