Introduction

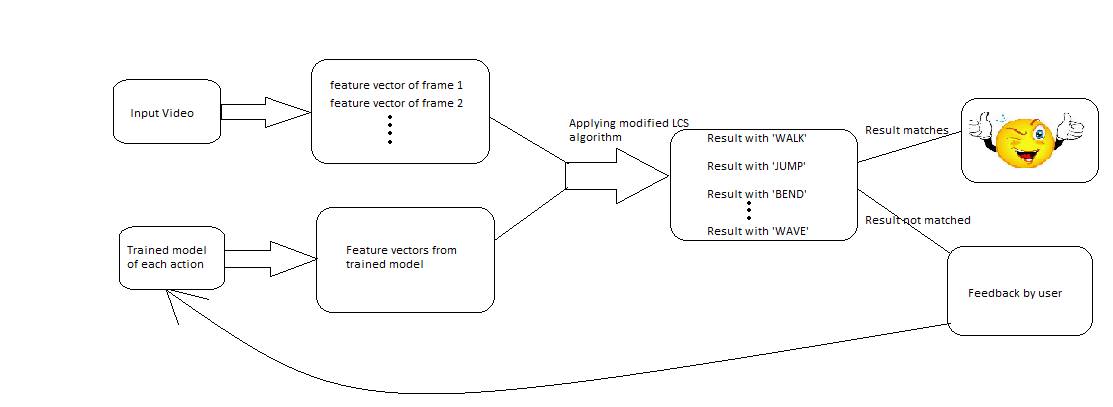

This work describes the implementation of a model that recognises a human action in a video by estimating the pose of an articulated human in static 2-D images. The video is split into frames, and features are extracted from each frame. Finally, a modified LCS (longest common subsequence) approach outputs the action performed by the individual.

Code and Other Resources

Related Work

Articulated Pose Estimation with Flexible Mixtures-of-Parts (Yi Yang, Deva Ramanan) [1]:

This paper describes a part-based method for pose estimation in static images. The human body is modelled as a spring model, and a contextual correlation is computed between the model parts. One way to visualise the model is as a configuration of body parts connected by springs. The spring-like connections allow the relative positions of the parts to vary, and the amount of deformation in each spring acts as a penalty (the cost of deformation). Most prior work on action recognition from video requires depth data in addition to RGB.
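The spring analogy above can be illustrated with a small sketch. It is a simplified quadratic penalty, not the scoring function of [1], which also includes appearance terms and mixture types; the function names, the rest offsets and the weights are all assumptions made for illustration.

```python
def deformation_cost(parent_xy, child_xy, rest_offset, weights):
    """Quadratic spring penalty for one parent-child pair of parts.

    rest_offset is the (assumed) preferred displacement of the child
    relative to the parent; weights are hypothetical spring stiffnesses.
    """
    dx = (child_xy[0] - parent_xy[0]) - rest_offset[0]
    dy = (child_xy[1] - parent_xy[1]) - rest_offset[1]
    wx, wy = weights
    return wx * dx * dx + wy * dy * dy


def total_deformation(positions, edges):
    """Sum the spring penalties over all connected part pairs."""
    return sum(
        deformation_cost(positions[parent], positions[child], rest, w)
        for parent, child, rest, w in edges
    )


# Example: the neck sits 2 pixels lower than its preferred offset.
positions = {"head": (0, 0), "neck": (0, 10)}
edges = [("head", "neck", (0, 8), (1.0, 1.0))]
cost = total_deformation(positions, edges)
```

A pose whose parts sit exactly at their preferred offsets has zero cost, and the cost grows with the square of the displacement, which is what makes strongly distorted skeletons expensive.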

An Approach to Pose-Based Action Recognition (Chunyu Wang, Yizhou Wang, Alan L. Yuille) [2]:

To represent human actions, this method first groups the estimated joints into five body parts: head, left/right arm and left/right leg. A dictionary of possible pose templates for each body part is formed by clustering the poses in the training data. For every action class, distinctive part sets (temporal and spatial) are identified to represent the action, and the maximum intersection among them is then found.

Our Approach

Part 1: Labelling different body parts

We get 26 parts from Prof. Deva Ramanan's code [3] and cluster them into 11 parts: left hand, arm, torso, thigh and leg; right hand, arm, torso, thigh and leg; and the head. Before clustering, we normalise the skeleton with respect to the head-neck length. This handles differences in the height and length of the detected skeleton caused by the different shapes and sizes of individuals. After normalisation, we use linear regression to estimate the 11 body parts described above.
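The normalisation step could look roughly like the sketch below. The joint names, the dictionary layout and the choice to centre the skeleton on the neck are assumptions for illustration; only the division by the head-neck length comes from the description above.

```python
import math


def normalise_skeleton(joints, head="head", neck="neck"):
    """Scale joint coordinates so that the head-neck distance equals 1.

    joints maps a (hypothetical) joint name to an (x, y) pixel position.
    Centring on the neck is an extra assumption that removes translation.
    """
    hx, hy = joints[head]
    nx, ny = joints[neck]
    scale = math.hypot(hx - nx, hy - ny)
    if scale == 0:
        raise ValueError("head and neck coincide; cannot normalise")
    return {
        name: ((x - nx) / scale, (y - ny) / scale)
        for name, (x, y) in joints.items()
    }


# Example: a skeleton whose head-neck length is 20 pixels.
skeleton = {"head": (10, 0), "neck": (10, 20), "hip": (10, 80)}
normalised = normalise_skeleton(skeleton)
```

After this step a tall and a short person in the same pose yield comparable coordinates, since every length is expressed in head-neck units.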

Part 2: Action recognition from video

Feature extraction: From the 11 parts above, we use a set of 8 angles as the feature vector of one image/frame.

The angles are those between the left hand and arm, left arm and torso, left torso and left thigh, and left thigh and left leg, and the corresponding four angles on the right side.
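As a minimal sketch of this feature vector, assume each of the 11 parts is available as a line segment with two end points. The part names and the segment representation are hypothetical, and the unsigned angle in degrees is one possible convention, not necessarily the one used in the project.

```python
import math

# Hypothetical part names; each part is a segment ((x0, y0), (x1, y1)).
ANGLE_PAIRS = [
    ("left_hand", "left_arm"),
    ("left_arm", "left_torso"),
    ("left_torso", "left_thigh"),
    ("left_thigh", "left_leg"),
    ("right_hand", "right_arm"),
    ("right_arm", "right_torso"),
    ("right_torso", "right_thigh"),
    ("right_thigh", "right_leg"),
]


def segment_angle(seg_a, seg_b):
    """Unsigned angle in degrees (0 to 180) between two segments."""
    (ax0, ay0), (ax1, ay1) = seg_a
    (bx0, by0), (bx1, by1) = seg_b
    ax, ay = ax1 - ax0, ay1 - ay0
    bx, by = bx1 - bx0, by1 - by0
    dot = ax * bx + ay * by
    cross = ax * by - ay * bx
    # atan2 is numerically safer than acos for nearly parallel segments.
    return math.degrees(math.atan2(abs(cross), dot))


def frame_features(parts):
    """Build the 8-angle feature vector for one frame."""
    return [segment_angle(parts[a], parts[b]) for a, b in ANGLE_PAIRS]
```

Because angles do not change under uniform scaling or translation, this representation stays comparable across people of different sizes and positions in the frame.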

For training we used the i3DPost Multi-view Human Action Datasets [4]. For testing we used our own approach, a modified LCS algorithm.
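The exact modification to LCS is not spelled out here, so the following is only one plausible sketch: a standard longest-common-subsequence dynamic program in which two frames count as equal when all of their angles agree within a tolerance. The tolerance value, the normalisation by template length and all function names are assumptions.

```python
def frames_match(f, g, tol=15.0):
    """Treat two angle vectors as equal if every angle differs by <= tol."""
    return all(abs(a - b) <= tol for a, b in zip(f, g))


def lcs_length(seq_a, seq_b, tol=15.0):
    """Length of the longest common subsequence under tolerant matching."""
    prev = [0] * (len(seq_b) + 1)
    for fa in seq_a:
        curr = [0]
        for j, fb in enumerate(seq_b, start=1):
            if frames_match(fa, fb, tol):
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def classify(test_seq, templates, tol=15.0):
    """Return the label of the template with the highest normalised LCS."""
    best_label, best_score = None, -1.0
    for label, seq in templates.items():
        score = lcs_length(test_seq, seq, tol) / max(len(seq), 1)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Toy example with one angle per frame instead of eight.
templates = {
    "wave": [[10], [50], [90], [50]],
    "walk": [[170], [160], [170], [160]],
}
test_video = [[12], [48], [48], [88], [52]]
predicted = classify(test_video, templates)
```

LCS tolerates extra or missing frames, so a test video performed faster or slower than the training video can still align with its template.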

Feedback system: At the end of a test video, we ask the user whether the result is consistent with the video. If it is not, we ask the user for the correct action and train our model on the new video accordingly.

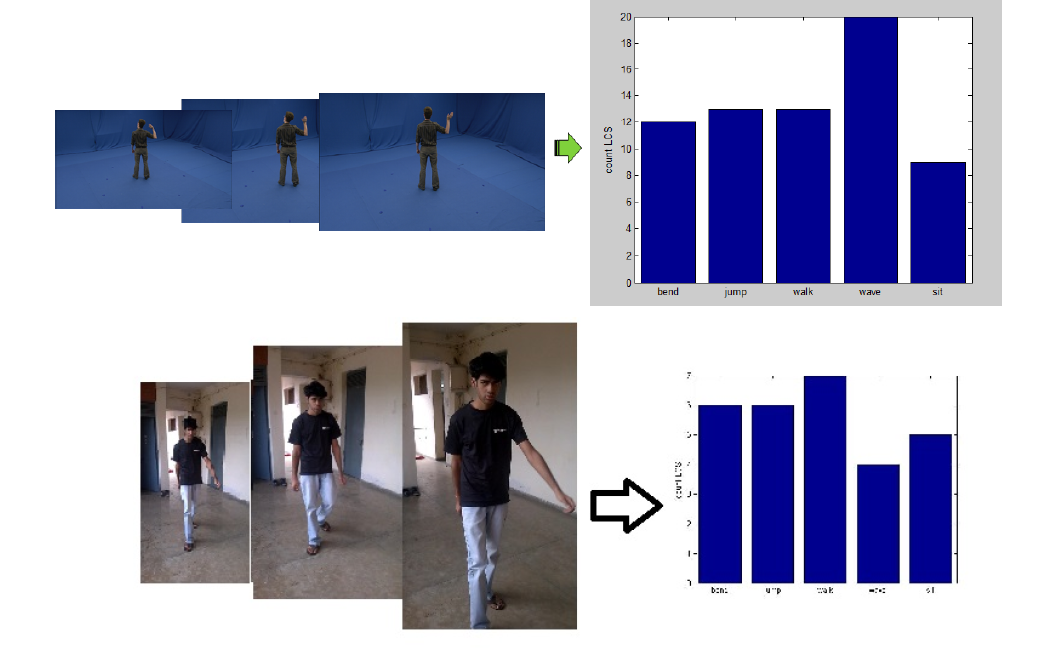

Result

Some result screenshots are shown above. Accuracy was high for actions such as wave, walk and jump, but lower for classes such as bend and sit. In bend and sit the skeleton was distorted, so the extracted feature vector was not always correct, and there were many occlusions, which led to a high penalty and a lower countLCS in our implementation.

References

1. Y. Yang and D. Ramanan, “Articulated pose estimation with flexible mixtures-of-parts”, Computer Vision and Pattern Recognition (CVPR), 2011.

2. Chunyu Wang, Yizhou Wang and Alan L. Yuille, “An approach to pose-based action recognition”, CVPR, 2013.

3. Code provided by D. Ramanan: http://www.ics.uci.edu/~dramanan/software/pose/

4. i3DPost Multi-view Human Action Datasets: http://kahlan.eps.surrey.ac.uk/i3dpost_action/

5. Hsuan-Sheng Chen, Hua-Tsung Chen, Yi-Wen Chen and Suh-Yin Lee, “Human Action Recognition Using Star Skeleton”, VSSN '06.
